performanceTest_2

thanks @yocoo Provide Tencent cloud host

machine A: Bifrost + insertDataTest client

CPU:4C Memory:8G

machine B: MySQL

CPU:4C Memory:8G Disk:1000G

machine C: ClickHouse

CPU:4C Memory:8G Disk:1000G

Version:

Bifrost Version:v1.6.2-release

MySQL Version: 5.7.30-log

ClickHouse Version : 20.8.3.18

insertDataTest Version : 1.6.2

1. Preparation

1).download Bifrost source, and build insertDataTest

git clone -b https://github.com/brokercap/Bifrost.git ./BifrostV1.6.x

cd BifrostV1.6.x/test

go build ./insertDataTest.go

2).create bifrost_test for mysql and clickhouse

MySQL table structure(5 table)

CREATE TABLE `binlog_field_test_5` ( `id` BIGINT(20) UNSIGNED NOT NULL AUTO_INCREMENT, `testtinyint` TINYINT(4) NOT NULL DEFAULT '-1', `testsmallint` SMALLINT(6) NOT NULL DEFAULT '-2', `testmediumint` MEDIUMINT(8) NOT NULL DEFAULT '-3', `testint` INT(11)) NOT NULL DEFAULT '-4', `testbigint` BIGINT(20) NOT NULL DEFAULT '-5', `testvarchar` VARCHAR(400) NOT NULL, `testchar` CHAR(2) NOT NULL, `testenum` ENUM('en1','en2','en3') NOT NULL DEFAULT 'en1', `testset` SET('set1','set2','set3') NOT NULL DEFAULT 'set1',`testtime` TIME NOT NULL DEFAULT '00:00:01', `testdate` DATE NOT NULL DEFAULT '1970-01-01', `testyear` YEAR(4) NOT NULL DEFAULT '1989', `testtimestamp` TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP, `testdatetime` DATETIME NOT NULL DEFAULT '1970-01-01 00:00:00',

`testfloat` FLOAT(9,2) NOT NULL DEFAULT '0.00', `testdouble` DOUBLE(9,2) NOT NULL DEFAULT '0.00', `testdecimal` DECIMAL(9,2) NOT NULL DEFAULT '0.00', `testtext` TEXT NOT NULL, `testblob` BLOB NOT NULL, `testbit` BIT(64) NOT NULL DEFAULT b'0', `testbool` TINNYINT(1) NOT NULL DEFAULT '0', `testmediumblob` MEDIUMBLOB NOT NULL, `testlongblob` LONGBLOB NOT NULL, `testtinyblob` TINYBLOB NOT NULL, `test_unsinged_tinyint` TINYINT(4) UNSIGNED NOT NULL DEFAULT '1', `test_unsinged_smallint` SMALLINT(6) UNSIGNED NOT NULL DEFFAULT '2', `test_unsinged_mediumint` MEDIUMINT(8) UNSIGNED NOT NULL DEFAULT '3', `test_unsinged_int` INT(11) UNSIGNED NOT NULL DEFAULT '4', `test_unsinged_bigint` BIGINT(20) UNSIGNED NOT NULL DEFAULT '5', PRIMARY KEY (`id`) ) ENGINE=MyISAM AUTO_INCREMENT=40000000000 DEFAULT CHARSET=utf8;

ClickHouse table structure (5 ClikcHouse table)

CREATE TABLE binlog_field_test_5 ( `id` UInt64, `testtinyint` Int8, `testsmallint` Int16, `testmediumint` Int32, `testint` Int32, `testbigint` Int64, `testvarchar` String, `testchar` String, `testenum` String, `testset` String, `testtime` String, `testdate` Date, `testyear` Int16, `testtimestamp` DateTime, `testdatetime` DateTime, `testfloat` Float64, `testdouble` Float64, `testdecimal` String, `testtext` String, `testblob` String, `testbit` Int64, `testbool` Int8, `testmediumblob` String, `testlongblob` String, `testtinyblob` String, `test_unsinged_tinyint` UInt8, `test_unsinged_smallint` UInt16, `test_unsinged_mediumint` UInt32, `test_unsinged_int` UInt32, `test_unsinged_bigint` UInt64, `binlog_event_type` String, `bifrost_data_version` Int64 ) ENGINE = MergeTree() ORDER BY id

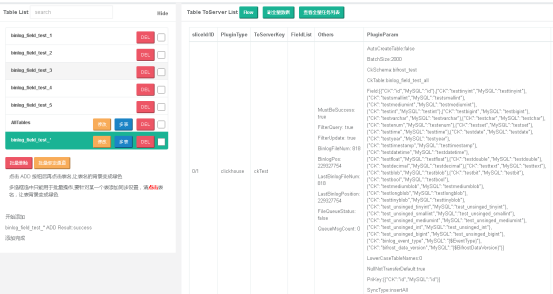

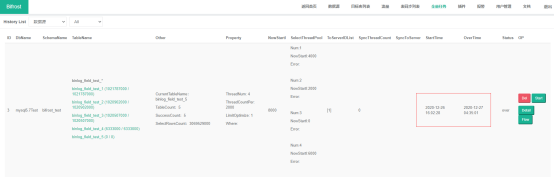

2. Increment test

2.1 Start

1000/100ms/connection ; default 2 connection

nohup ./insertDataTest -host 10.0.3.31 -user xxtest -pwd xxtest -schema bifrost_test -table binlog_field_test_1 -count 1000000000 -batchsize 1000 -time_interval 100 >> ./1.log

nohup ./insertDataTest -host 10.0.3.31 -user xxtest -pwd xxtest -schema bifrost_test -table binlog_field_test_2 -count 1000000000 -batchsize 1000 -time_interval 100 >> ./2.log

nohup ./insertDataTest -host 10.0.3.31 -user xxtest -pwd xxtest -schema bifrost_test -table binlog_field_test_3 -count 1000000000 -batchsize 1000 -time_interval 100 >> ./3.log

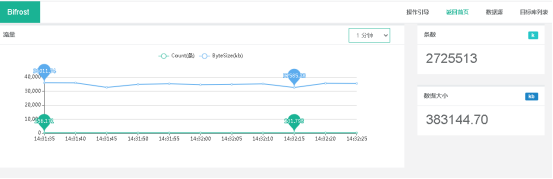

The parsing speed is about 2.7 million per minute, with the size of 380mb

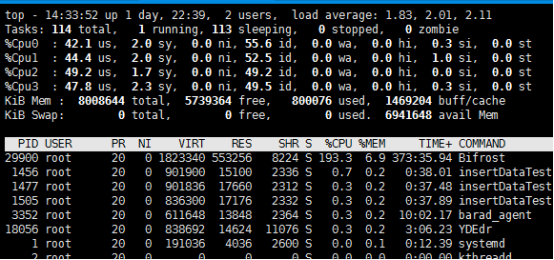

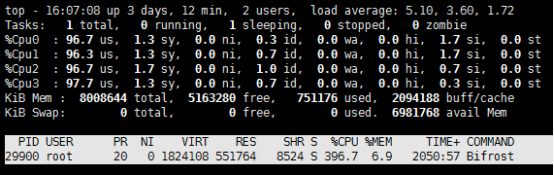

Hardware usage

Memory: 550M

CPU: 210%

After synchronizing 620 million data, we tried to write 10 million data to the fourth table to see if the sites could keep up. As a result, the sites began to widen, and the gap was almost 200 million, and gradually widened. The amount and size of data processed per minute did not increase, indicating that the current 2.7 million / min and 380 MB / min are the upper limit of the current hardware configuration. When the bit difference is about 100s, the data brushing process of the fourth table is suspended

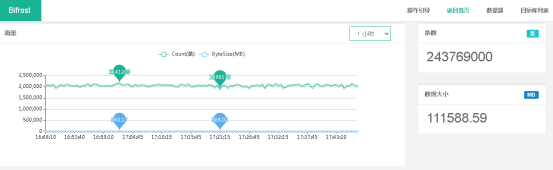

2.2 Result

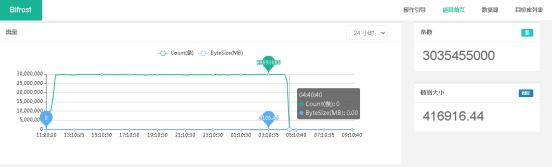

大约花费 17.5 个小时同步完30亿数据,平均约速率约为 295万条/分钟,389M/分钟 It takes about 17.5 hours to synchronize 3 billion pieces of data, with an average rate of about 2.95 million pieces / minute and 389m pieces / minute

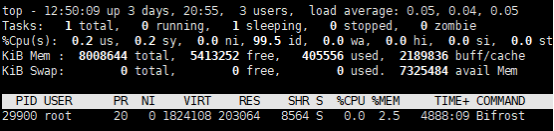

硬件使用情况

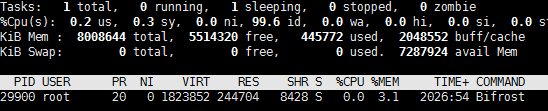

The synchronization is completed, and the actual use of Bifrost is reduced to 240m

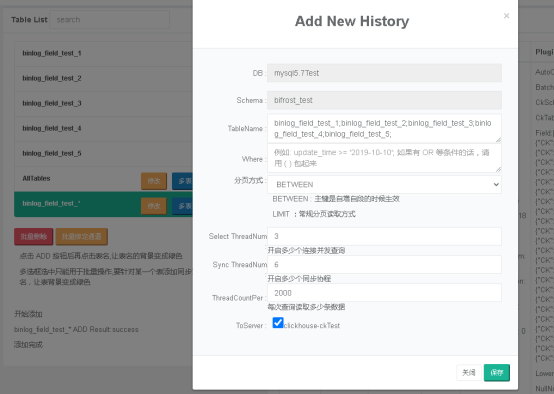

3. Full test

3.1 Start

Because the full configuration supports the number of threads that pull data and synchronize data, we will use binlog here for convenience_ field_ test_ 1,binlog_ field_ test_ 2,binlog_ field_ test_ 3. Synchronize the data of three tables to binlog in Clickhouse_ field_ test_ It’s in the same watch

select thead count: 3

sync to clickhouse thread count: 6

CPU : 400%

Memroy : 550M

3.2 Result

total: 3069529000

use 12 hours 33 minutes

4,076,399/minute

After full synchronization, CPU and memory return to normal state

4. Result

| Increment | Full | |

| Tabel Count | 3 | 3 |

| Data Count | 3069529000 | 3069529000 |

| Select/Parse Thread Count | 1 | 3 |

| Sync Thread Count | 3 | 6 |

| Max CPU Uasge | 220% | 400% |

| Max Memory Use | < 1G | < 1G |

| Use time | 17 hours 30 minutes | 12 hours 33 minutes |

| Data Count/minute | 2,923,360 | 4,076,399 |